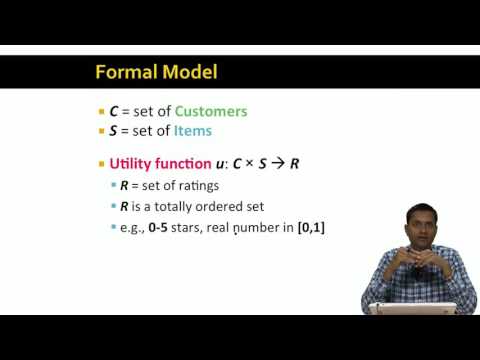

5 1 Overview of Recommender Systems 16 51

HaoLang Chen, published on January 14, 2021

US /ˌɪndəˈvɪdʒuəl/

・

UK /ˌɪndɪˈvɪdʒuəl/

- n. (c.) individual person; single item; individual entity; individual event (in competition)

- adj. personal; distinctive; separate

US /fɪˈnɑməˌnɑn, -nən/

・

UK /fə'nɒmɪnən/

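- v.t./i. to appear; to estimate; I think that ...; to consider

- n. silhouette; (calculated) amount; portrait; diagram; shape; character; celebrity; human form; numeral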

US /ˌrɛkəˈmɛnd/

・

UK /ˌrekə'mend/