Subtitles and Vocabulary

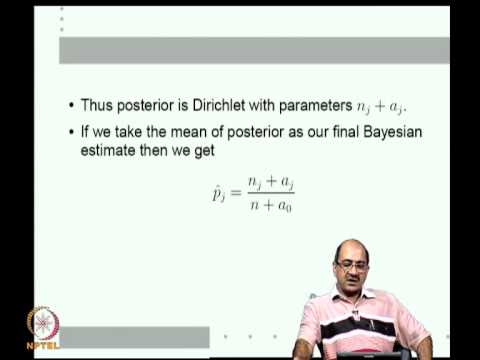

Mod-03 Lec-08 Bayesian Estimation examples; the exponential family of densities and ML estimates

Posted by aga on January 14, 2021

Video vocabulary

square

US /skwɛr/ ・ UK /skweə(r)/

- n. (c./u.) square (the shape); area; public square, plaza

- adj. honest, upright; fair; square (of area units, as in "square meter")

- adv. directly, straightforwardly; decisively, firmly

- v.t. to adjust, to correct; to square (raise to the second power); to straighten; to make level or square

Level: A2 (elementary) · TOEIC · GEPT Elementary