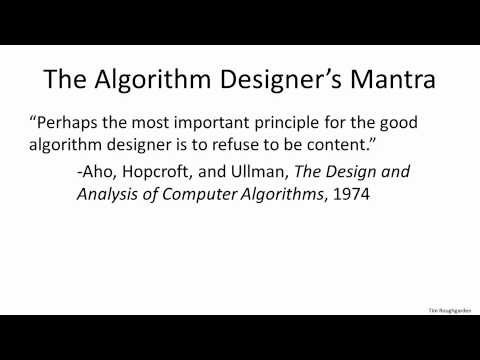

coursera - Design and Analysis of Algorithms I - 1.1 Introduction: Why Study Algorithms?

Posted by Eating on January 14, 2021