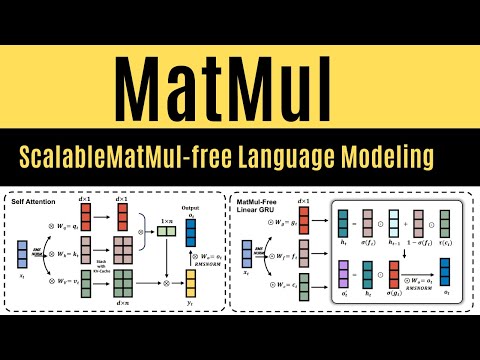

What is MatMul-free Language Modeling

Posted by a0912556613 on June 24, 2024
