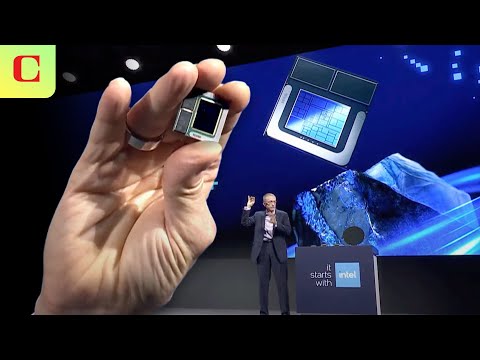

Intel's Lunar Lake AI Chip Event: Everything Revealed in 10 Minutes

Published by VoiceTube on June 6, 2024

equivalent: US /ɪˈkwɪvələnt/ ・ UK /ɪˈkwɪvələnt/

incredible: US /ɪnˈkrɛdəbəl/ ・ UK /ɪnˈkredəbl/

- adj. unbelievable; great; hard to believe

engage: US /ɪn'gedʒ/ ・ UK /ɪn'ɡeɪdʒ/

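- v.t. to fight (in battle); to contend with; to hire; to attract; to take part in; to be occupied with; to mesh (of gears); to pledge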

advantage: US /ædˈvæntɪdʒ/ ・ UK /əd'vɑ:ntɪdʒ/

- n. (c./u.) advantage; merit; benefit

- v.t. to make use of; to take advantage of