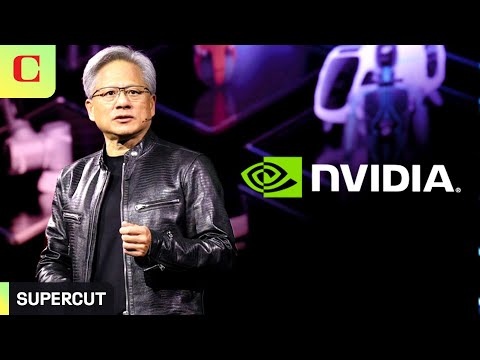

Nvidia's 2024 Computex Keynote: Everything Revealed in 15 Minutes

Published by VoiceTube on June 6, 2024

incredibly

US /ɪnˈkrɛdəblɪ/ ・ UK /ɪnˈkredəbli/

- adv. unbelievably; incredibly; extremely

incredible

US /ɪnˈkrɛdəbəl/ ・ UK /ɪnˈkredəbl/

- adj. unbelievable; great; hard to believe

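process

US /ˈprɑsˌɛs, ˈproˌsɛs/ ・ UK /prə'ses/

- v.t. to process (data) by computer; to handle (following a set procedure); to deal with; to treat or manufacture; to comprehend

- n. (c./u.) (prescribed) procedure; process; course of events; method; legal proceedings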

multiple

US /ˈmʌltəpəl/ ・ UK /ˈmʌltɪpl/

- adj. multiple; of many kinds; (medical) occurring in several places

- n. (c.) a multiple; multiplier

- pron. multiple