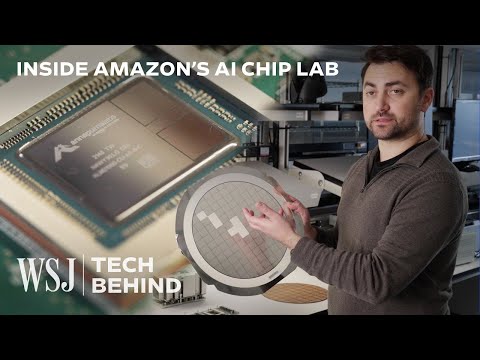

How are today's hottest "AI" chips made? WSJ takes you inside! (Inside the Making of an AI Chip | WSJ Tech Behind)

US /ˈprɑsˌɛs, ˈproˌsɛs/ ・ UK /prə'ses/

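- v.t. to process (data) by computer; to handle (following a prescribed procedure); to deal with; to treat or refine (materials); to comprehend

- n. (c./u.) (prescribed) procedure; process; course of development; method; legal proceedings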

US /ɪˈsɛnʃəl/ ・ UK /ɪ'senʃl/

US /ˈtɪpɪklɪ/ ・ UK /ˈtɪpɪkli/

US /ˈɪnstəns/ ・ UK /'ɪnstəns/

- n. (c./u.) example; case; occurrence; instance (computing)

- v.t. to cite as an example

- phr. at the request of …