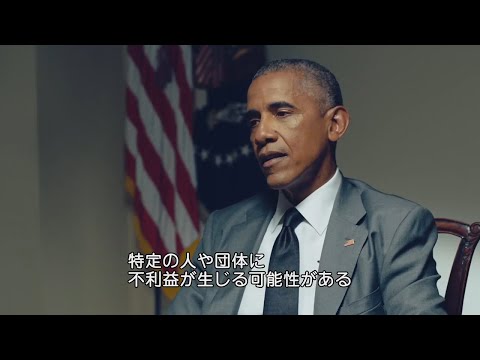

In a World Where Self-Driving Cars Are on the Road | Barack Obama × Joi Ito | Ep7 | WIRED.jp


US /ɪˈsenʃəli/

・

UK /ɪˈsenʃəli/

- adv. essentially; originally; in substance; in practice

- n. (c./u.) bunch; bundle; a group of people

- v.t. to gather into a bunch

- v.t./i. to pleat; to gather into folds

US /ˈɪnˌstɪŋkt/

・

UK /'ɪnstɪŋkt/

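- v.t./i. to appear; to estimate; "I figure that ..."; to think

- n. silhouette; (calculated) amount; likeness; diagram; shape; character; well-known person; human form; numeral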