19. Leiserchess Codewalk

US /ɛnˈtaɪr/

・

UK /ɪn'taɪə(r)/

- adj. whole; complete; undivided; having an entire margin (botany)

US /ˈbesɪkəli,-kli/

・

UK /ˈbeɪsɪkli/

US /ˈɔdiəns/

・

UK /ˈɔ:diəns/

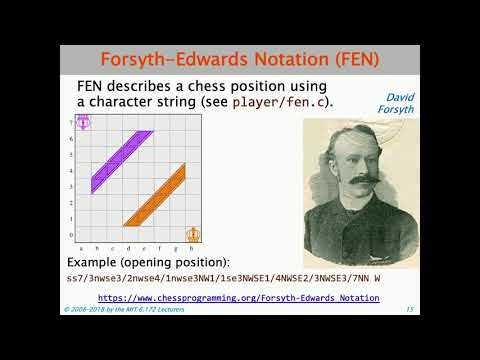

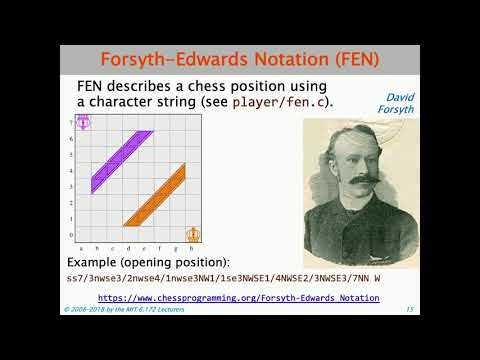

US /pəˈzɪʃən/

・

UK /pəˈzɪʃn/

- n. (c./u.) attitude, viewpoint; location; (in team sports) a player's position; job, post; situation; advantage

- v.t. to position; to place