Subtitles and Vocabulary

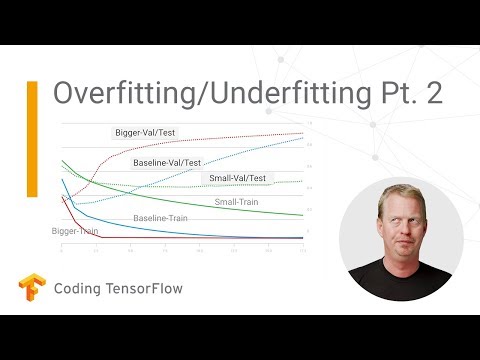

Solve your model's overfitting and underfitting problems - Pt.2 (Coding TensorFlow)

Posted by 林宜悉 on January 14, 2021

Video Vocabulary

present

US /ˈprɛznt/

UK /'preznt/

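- adj. in attendance; present (at a place); current

- n. the present (current time); the present tense; a gift

- v.t. to introduce; to host (a show or event); to show or display; to give (as a gift)

- v.i. to appear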

A1 · Beginner · TOEIC Beginner · GEPT Elementary
